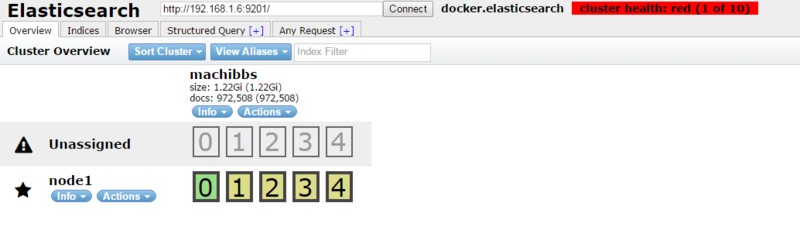

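An error when pushing a large amount of data into Elasticsearch running in a Docker container

This continues from "Pushing Machi BBS (まちBBS) threads into Elasticsearch" on moremagic's diary.

When I looked at the log, there were a lot of errors.

It seems the JSON data I was pushing in had format errors.

So it wasn't Elasticsearch's fault after all...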
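Format errors like this can be caught before the data ever reaches Elasticsearch. The following is only a minimal sketch, not the script from the previous post: it assumes the bulk body is newline-delimited JSON, and the function name and the `name`/`body` fields in the sample are made up for illustration.

```python
import json


def find_invalid_bulk_lines(body: str) -> list[tuple[int, str]]:
    """Return (line number, message) pairs for lines that are not JSON objects.

    Hypothetical helper: it only checks that every non-empty line parses as
    a JSON object and that the body ends with a newline.
    """
    problems = []
    lines = body.split("\n")
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # skip the empty string left after the final newline
        try:
            parsed = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((lineno, exc.msg))
            continue
        if not isinstance(parsed, dict):
            problems.append((lineno, "expected a JSON object"))
    if not body.endswith("\n"):
        problems.append((len(lines), "bulk body must end with a newline"))
    return problems


if __name__ == "__main__":
    # Sample data invented for this sketch; line 4 has an unterminated string.
    sample = (
        '{"create":{"_index":"machibbs","_type":"osaka"}}\n'
        '{"name":"a","body":"ok"}\n'
        '{"create":{"_index":"machibbs","_type":"osaka"}}\n'
        '{"name":"b","body":"broken}\n'
    )
    for lineno, message in find_invalid_bulk_lines(sample):
        print(f"line {lineno}: {message}")
```

Running a check like this on the generated file first makes it easier to tell data problems apart from problems on the Elasticsearch side.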

So I fixed the script (I edited yesterday's post directly) and ran it once more.

This time the _bulk API returned an error like this:

{"create":{"_index":"machibbs","_type":"osaka","_id":"AVSXe-VrHxqhaPg4y2Io","status":503,"error":{"type":"unavailable_shards_exception","reason":"[machibbs][1] primary shard is not active Timeout: [1m], request: [BulkShardRequest to [machibbs] containing [98] requests]"}

The log looks like this:

/var/log/elasticsearch/docker.elasticsearch.log

[2016-05-09 21:47:55,668][WARN ][cluster.action.shard ] [node1] [machibbs][3] received shard failed for target shard [[machibbs][3], node[4GX96VHMRgSTGFCxEjpHyQ], [P], v[6], s[INITIALIZING], a[id=0uxOKoTRTlSBmSsFPJYgEQ], unassigned_info[[reason=ALLOCATION_FAILED], at[2016-05-09T21:35:39.156Z], details[engine failure, reason [create], failure IOException[No space left on device]]]], indexUUID [TANaa136Tcqys6d-Xv1wqQ], message [master {node1}{4GX96VHMRgSTGFCxEjpHyQ}{172.18.0.2}{172.18.0.2:9300} marked shard as initializing, but shard is marked as failed, resend shard failure]

[2016-05-09 21:47:55,669][WARN ][indices.cluster ] [node1] [[machibbs][3]] marking and sending shard failed due to [engine failure, reason [create]]

java.io.IOException: No space left on device

at sun.nio.ch.FileDispatcherImpl.write0(Native Method)

at sun.nio.ch.FileDispatcherImpl.write(FileDispatcherImpl.java:60)

at sun.nio.ch.IOUtil.writeFromNativeBuffer(IOUtil.java:93)

at sun.nio.ch.IOUtil.write(IOUtil.java:65)

at sun.nio.ch.FileChannelImpl.write(FileChannelImpl.java:211)

at org.elasticsearch.common.io.Channels.writeToChannel(Channels.java:208)

at org.elasticsearch.index.translog.BufferingTranslogWriter.flush(BufferingTranslogWriter.java:84)

at org.elasticsearch.index.translog.BufferingTranslogWriter.add(BufferingTranslogWriter.java:66)

at org.elasticsearch.index.translog.Translog.add(Translog.java:545)

at org.elasticsearch.index.engine.InternalEngine.innerCreateNoLock(InternalEngine.java:440)

at org.elasticsearch.index.engine.InternalEngine.innerCreate(InternalEngine.java:378)

at org.elasticsearch.index.engine.InternalEngine.create(InternalEngine.java:346)

at org.elasticsearch.index.shard.TranslogRecoveryPerformer.performRecoveryOperation(TranslogRecoveryPerformer.java:183)

at org.elasticsearch.index.shard.TranslogRecoveryPerformer.recoveryFromSnapshot(TranslogRecoveryPerformer.java:107)

at org.elasticsearch.index.shard.IndexShard$1.recoveryFromSnapshot(IndexShard.java:1584)

at org.elasticsearch.index.engine.InternalEngine.recoverFromTranslog(InternalEngine.java:238)

at org.elasticsearch.index.engine.InternalEngine.<init>(InternalEngine.java:174)

at org.elasticsearch.index.engine.InternalEngineFactory.newReadWriteEngine(InternalEngineFactory.java:25)

at org.elasticsearch.index.shard.IndexShard.newEngine(IndexShard.java:1515)

at org.elasticsearch.index.shard.IndexShard.createNewEngine(IndexShard.java:1499)

at org.elasticsearch.index.shard.IndexShard.internalPerformTranslogRecovery(IndexShard.java:972)

at org.elasticsearch.index.shard.IndexShard.performTranslogRecovery(IndexShard.java:944)

at org.elasticsearch.index.shard.StoreRecoveryService.recoverFromStore(StoreRecoveryService.java:241)

at org.elasticsearch.index.shard.StoreRecoveryService.access$100(StoreRecoveryService.java:56)

at org.elasticsearch.index.shard.StoreRecoveryService$1.run(StoreRecoveryService.java:129)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2016-05-09 21:47:55,669][WARN ][cluster.action.shard ] [node1] [machibbs][3] received shard failed for target shard [[machibbs][3], node[4GX96VHMRgSTGFCxEjpHyQ], [P], v[6], s[INITIALIZING], a[id=0uxOKoTRTlSBmSsFPJYgEQ], unassigned_info[[reason=ALLOCATION_FAILED], at[2016-05-09T21:35:39.156Z], details[engine failure, reason [create], failure IOException[No space left on device]]]], indexUUID [TANaa136Tcqys6d-Xv1wqQ], message [engine failure, reason [create]], failure [IOException[No space left on device]]

java.io.IOException: No space left on device

at sun.nio.ch.FileDispatcherImpl.write0(Native Method)

at sun.nio.ch.FileDispatcherImpl.write(FileDispatcherImpl.java:60)

at sun.nio.ch.IOUtil.writeFromNativeBuffer(IOUtil.java:93)

at sun.nio.ch.IOUtil.write(IOUtil.java:65)

at sun.nio.ch.FileChannelImpl.write(FileChannelImpl.java:211)

at org.elasticsearch.common.io.Channels.writeToChannel(Channels.java:208)

at org.elasticsearch.index.translog.BufferingTranslogWriter.flush(BufferingTranslogWriter.java:84)

at org.elasticsearch.index.translog.BufferingTranslogWriter.add(BufferingTranslogWriter.java:66)

at org.elasticsearch.index.translog.Translog.add(Translog.java:545)

at org.elasticsearch.index.engine.InternalEngine.innerCreateNoLock(InternalEngine.java:440)

at org.elasticsearch.index.engine.InternalEngine.innerCreate(InternalEngine.java:378)

at org.elasticsearch.index.engine.InternalEngine.create(InternalEngine.java:346)

at org.elasticsearch.index.shard.TranslogRecoveryPerformer.performRecoveryOperation(TranslogRecoveryPerformer.java:183)

at org.elasticsearch.index.shard.TranslogRecoveryPerformer.recoveryFromSnapshot(TranslogRecoveryPerformer.java:107)

at org.elasticsearch.index.shard.IndexShard$1.recoveryFromSnapshot(IndexShard.java:1584)

at org.elasticsearch.index.engine.InternalEngine.recoverFromTranslog(InternalEngine.java:238)

at org.elasticsearch.index.engine.InternalEngine.<init>(InternalEngine.java:174)

at org.elasticsearch.index.engine.InternalEngineFactory.newReadWriteEngine(InternalEngineFactory.java:25)

at org.elasticsearch.index.shard.IndexShard.newEngine(IndexShard.java:1515)

at org.elasticsearch.index.shard.IndexShard.createNewEngine(IndexShard.java:1499)

at org.elasticsearch.index.shard.IndexShard.internalPerformTranslogRecovery(IndexShard.java:972)

at org.elasticsearch.index.shard.IndexShard.performTranslogRecovery(IndexShard.java:944)

at org.elasticsearch.index.shard.StoreRecoveryService.recoverFromStore(StoreRecoveryService.java:241)

at org.elasticsearch.index.shard.StoreRecoveryService.access$100(StoreRecoveryService.java:56)

at org.elasticsearch.index.shard.StoreRecoveryService$1.run(StoreRecoveryService.java:129)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2016-05-09 21:47:58,308][WARN ][monitor.jvm ] [node1] [gc][young][26744][7567] duration [1.4s], collections [1]/[2s], total [1.4s]/[8.2m], memory [198.8mb]->[71.9mb]/[1007.3mb], all_pools {[young] [15.4mb]->[1.7mb]/[133.1mb]}{[survivor] [16.6mb]->[13.8mb]/[16.6mb]}{[old] [166.6mb]->[56.3mb]/[857.6mb]}

[2016-05-09 21:48:04,485][INFO ][cluster.routing.allocation.decider] [node1] rerouting shards: [one or more nodes has gone under the high or low watermark]

[2016-05-09 21:49:35,712][INFO ][cluster.routing.allocation.decider] [node1] low disk watermark [85%] exceeded on [4GX96VHMRgSTGFCxEjpHyQ][node1][/var/lib/elasticsearch/docker.elasticsearch/nodes/0] free: 1.4gb[14.6%], replicas will not be assigned to this node

[2016-05-09 21:50:05,773][INFO ][cluster.routing.allocation.decider] [node1] low disk watermark [85%] exceeded on [4GX96VHMRgSTGFCxEjpHyQ][node1][/var/lib/elasticsearch/docker.elasticsearch/nodes/0] free: 1.3gb[13.5%], replicas will not be assigned to this node

[2016-05-09 21:50:36,737][INFO ][cluster.routing.allocation.decider] [node1] low disk watermark [85%] exceeded on [4GX96VHMRgSTGFCxEjpHyQ][node1][/var/lib/elasticsearch/docker.elasticsearch/nodes/0] free: 1.1gb[11.8%], replicas will not be assigned to this node

[2016-05-09 21:51:09,312][INFO ][cluster.routing.allocation.decider] [node1] low disk watermark [85%] exceeded on [4GX96VHMRgSTGFCxEjpHyQ][node1][/var/lib/elasticsearch/docker.elasticsearch/nodes/0] free: 1gb[10.3%], replicas will not be assigned to this node

[2016-05-09 21:51:41,695][WARN ][cluster.routing.allocation.decider] [node1] high disk watermark [90%] exceeded on [4GX96VHMRgSTGFCxEjpHyQ][node1][/var/lib/elasticsearch/docker.elasticsearch/nodes/0] free: 961.6mb[9.6%], shards will be relocated away from this node

[2016-05-09 21:51:42,954][INFO ][cluster.routing.allocation.decider] [node1] rerouting shards: [high disk watermark exceeded on one or more nodes]

But there should be plenty of disk space left...

root@ubuntu:~# df -h
Filesystem                   Size  Used Avail Use% Mounted on
udev                         491M     0  491M   0% /dev
tmpfs                        100M  3.2M   97M   4% /run
/dev/mapper/ubuntu--vg-root   26G   12G   14G  46% /
tmpfs                        497M     0  497M   0% /dev/shm
tmpfs                        5.0M     0  5.0M   0% /run/lock
tmpfs                        497M     0  497M   0% /sys/fs/cgroup
/dev/sda1                    472M   29M  419M   7% /boot
root@ubuntu:~# docker exec -ti node1 /bin/bash
[root@node1 elasticsearch]# df -h
Filesystem                                                                                        Size  Used Avail Use% Mounted on
/dev/mapper/docker-252:0-391609-80642fad3ea0acc74e9299473903de85a619c4d4a55f8d9da820afbfbda8e877  9.8G  8.6G  715M  93% /
tmpfs                                                                                             497M     0  497M   0% /dev
tmpfs                                                                                             497M     0  497M   0% /sys/fs/cgroup
/dev/mapper/ubuntu--vg-root                                                                        26G   12G   14G  46% /etc/hosts
shm                                                                                                64M     0   64M   0% /dev/shm

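Huh? Disk usage inside the container is at 93%?

So the problem is that the Docker container itself has run out of free disk space: the host's / is only 46% full, but the container's / is a separate 9.8G device.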
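The log above mentions a low disk watermark of 85% and a high one of 90%. As a rough sketch (not something Elasticsearch ships), the same comparison can be run from inside the container with Python's standard library. The function names are made up for illustration, and the percentage computed here may differ slightly from what `df` reports because of reserved blocks and rounding.

```python
import shutil

# Thresholds taken from the log lines above (low 85%, high 90%).
LOW_WATERMARK_PCT = 85.0
HIGH_WATERMARK_PCT = 90.0


def used_percent(path: str) -> float:
    """Percentage of the filesystem holding `path` that is in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def classify_disk_usage(used_pct: float,
                        low: float = LOW_WATERMARK_PCT,
                        high: float = HIGH_WATERMARK_PCT) -> str:
    """Return "high", "low", or "ok" depending on which watermark is exceeded."""
    if used_pct > high:
        return "high"
    if used_pct > low:
        return "low"
    return "ok"


if __name__ == "__main__":
    # "/" is an assumption; pass the Elasticsearch data path to check that
    # filesystem instead.
    pct = used_percent("/")
    print(f"used: {pct:.1f}% -> {classify_disk_usage(pct)}")
```

With the numbers from the `df` output, 93% in the container lands above the high watermark, while 46% on the host is fine.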

Is there some limit on how much disk a Docker container can use?

So I looked into how Docker mounts disks for a container.

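■Summary of how the disk is mounted

Ver 1.8.0

At container startup, / and /etc/hosts are mounted so that the host OS's disk can be used in full.

Ver 1.11.1

At container startup, unless an option is specified, a 10G device is mounted at / by default.

/etc/hosts is still mounted with the host OS's full disk, as before.

So after upgrading Docker, it seems you have to specify the disk-size option at startup.

I didn't know that.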

■Retry

I changed the Elasticsearch container so that it starts with a data volume for /var/lib/elasticsearch.

This should solve the disk shortage.

root@ubuntu:~# docker run -d -p 9201:9200 --net elastic -h node1 --name node1 -v /var/lib/elasticsearch moremagic/elasticsearch

To be continued another day.

■References

http://www.slideshare.net/HommasSlide/docker-52965982

moremagic

2016-05-10